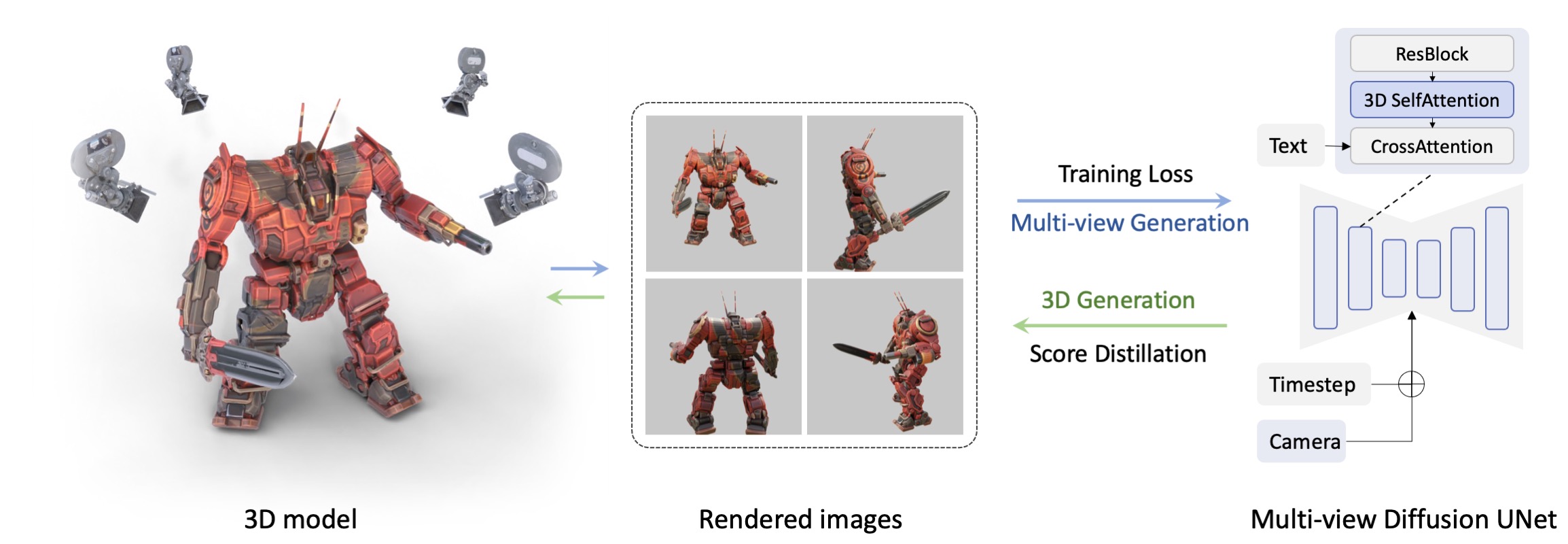

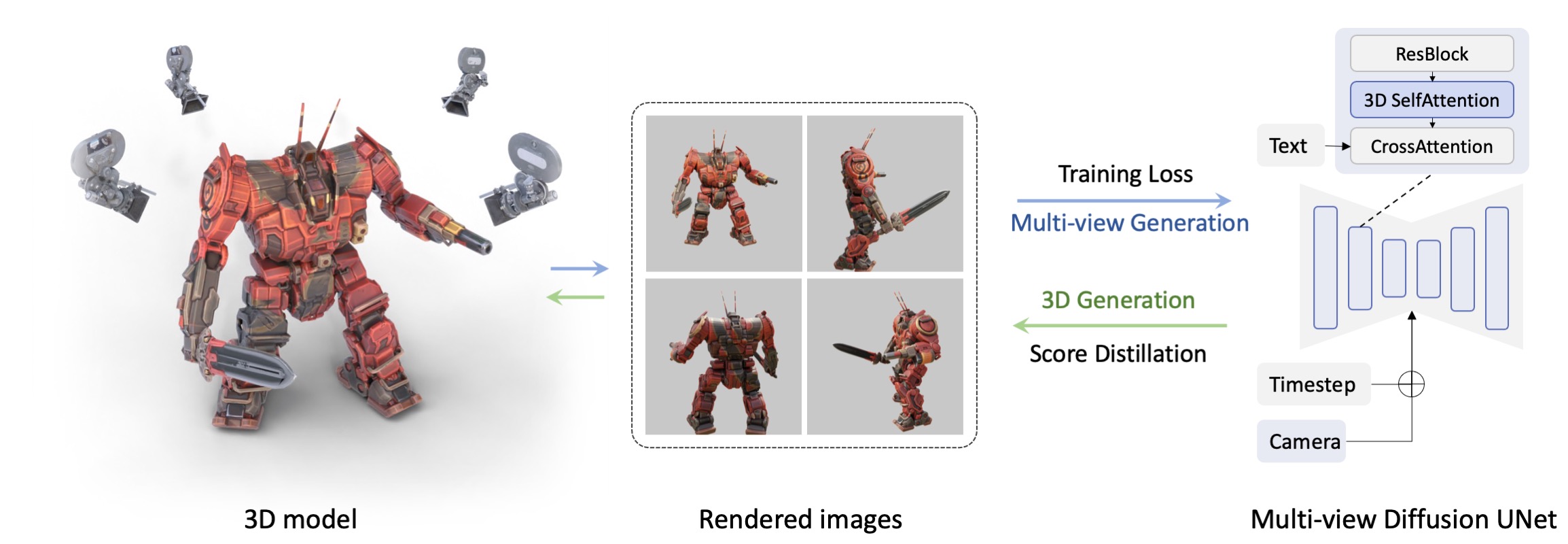

We introduce MVDream, a multi-view diffusion model that generates consistent multi-view images from a text prompt. By learning from both 2D and 3D data, a multi-view diffusion model can combine the generalizability of 2D diffusion models with the consistency of 3D renderings. We show that such a multi-view prior can serve as a generalizable 3D prior that is agnostic to the choice of 3D representation. It can be applied to 3D generation via Score Distillation Sampling, significantly improving the consistency and stability of existing 2D-lifting methods. It can also learn new concepts from a few 2D examples, akin to DreamBooth, but for 3D generation.
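This page does not describe the network architecture. One plausible way for a diffusion model to denoise several views consistently is to let its self-attention span the tokens of all views at once, so that each view can condition on the others. The NumPy sketch below illustrates only that idea; the function name, tensor shapes, and random projection matrices are illustrative assumptions, not MVDream's code.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_view_self_attention(feats, wq, wk, wv):
    """Single-head self-attention whose keys and values span all views.

    feats: (num_views, num_tokens, dim) per-view features, flattened spatially.
    wq, wk, wv: (dim, dim) query/key/value projections (random placeholders here).
    """
    v, n, d = feats.shape
    # Merge the view axis into the token axis so that each token can attend
    # to tokens of every view, not only to those of its own image.
    x = feats.reshape(v * n, d)
    q, k, val = x @ wq, x @ wk, x @ wv
    attn = softmax(q @ k.T / np.sqrt(d), axis=-1)  # (v*n, v*n) attention weights
    out = (attn @ val).reshape(v, n, d)            # back to per-view layout
    return out, attn


rng = np.random.default_rng(0)
views, tokens, dim = 4, 16, 8   # e.g. 4 camera views of a 4x4 latent grid
feats = rng.standard_normal((views, tokens, dim))
wq, wk, wv = (rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))
out, attn = cross_view_self_attention(feats, wq, wk, wv)
print(out.shape, attn.shape)  # (4, 16, 8) (64, 64)
```

If each view attended only to itself, `attn` would be block-diagonal; the off-diagonal blocks are what let information flow between views.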

Our multi-view diffusion model can be applied as a 3D prior for 3D generation via Score Distillation Sampling.
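Score Distillation Sampling (introduced in DreamFusion) updates 3D parameters θ with the gradient w(t)(ε̂(x_t; y, t) − ε) ∂x/∂θ, where x is a rendering, x_t = α_t x + σ_t ε is its noised version, and ε̂ is the diffusion model's noise prediction for prompt y. With a multi-view prior, several camera views are rendered and scored together. The NumPy sketch below is a toy under stated assumptions: the renderer is the identity (θ is the view stack itself), `toy_denoiser` is a hypothetical stand-in that already "knows" a consistent target, and the cosine schedule and w(t) = σ_t² weighting are illustrative choices.

```python
import numpy as np


def toy_denoiser(x_t, alpha, sigma, target):
    # Hypothetical stand-in for a text-conditioned multi-view diffusion model:
    # it "believes" the clean views are `target` and returns the noise that
    # would turn them into x_t. A real model would be a neural network.
    return (x_t - alpha * target) / sigma


def sds_step(views, target, rng, lr=1.0):
    """One Score Distillation Sampling update on a stack of rendered views."""
    t = rng.uniform(0.02, 0.98)                          # random diffusion time
    alpha = np.cos(0.5 * np.pi * t)                      # assumed cosine schedule
    sigma = np.sin(0.5 * np.pi * t)
    eps = rng.standard_normal(views.shape)               # fresh Gaussian noise
    x_t = alpha * views + sigma * eps                    # noise all views at the same t
    eps_hat = toy_denoiser(x_t, alpha, sigma, target)
    weight = sigma ** 2                                  # one common choice of w(t)
    grad = weight * (eps_hat - eps)                      # SDS omits the denoiser Jacobian
    # Identity "renderer": the parameters are the views themselves, so
    # d(views)/d(theta) is the identity and the SDS gradient applies directly.
    return views - lr * grad


rng = np.random.default_rng(0)
num_views, height, width = 4, 8, 8
target = rng.standard_normal((num_views, height, width))  # stand-in for consistent views
views = np.zeros_like(target)                               # initial renders
for _ in range(500):
    views = sds_step(views, target, rng)
print(np.abs(views - target).max())  # shrinks toward 0
```

In this toy, ε̂ − ε reduces to α_t(x − target)/σ_t, so each step moves the views a fraction σ_t·α_t of the way toward the target. A real pipeline would replace both stand-ins with a differentiable renderer (e.g. a NeRF) and the multi-view diffusion network.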

MVDream generates objects and scenes in a multi-view consistent way.

We collected 40 prompts from different sources to compare with other text-to-3D methods. All methods are run in threestudio with a fixed default configuration for every prompt, without hyper-parameter tuning.

Comparison panels: Dreamfusion-IF, Magic3D-IF-SD, Text2Mesh-IF, ProlificDreamer, and Ours, shown for prompts including "an astronaut riding a horse", "baby yoda in the style of Mormookiee", "Handpainted watercolor windmill, hand-painted", and "Darth Vader helmet, highly detailed".

Like DreamBooth3D, our multi-view diffusion model can be trained on a few images of the same subject for personalized 3D generation, but with a much simpler strategy.

Left: "Photo of a [v] dog"
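The page says only that personalization uses "a much simpler strategy" than DreamBooth3D. A common DreamBooth-style recipe fine-tunes the denoiser on the few subject images while a regularizer keeps the weights near their pretrained values. The sketch below assumes that regularizer is an L2 penalty on the weight change, λ‖W − W0‖², and uses a hypothetical linear noise predictor on random stand-in data; it illustrates the trade-off, not the exact loss used here.

```python
import numpy as np


def finetune(w0, x0, eps, alpha, sigma, lam, lr=0.1, steps=200):
    """Gradient descent on denoising MSE + lam * ||W - W0||^2 (toy linear model)."""
    x_t = alpha * x0 + sigma * eps               # noised few-shot samples
    n, d = x0.shape
    w = w0.copy()
    for _ in range(steps):
        resid = x_t @ w - eps                     # predicted minus true noise
        grad_data = 2.0 * x_t.T @ resid / (n * d) # gradient of the mean squared error
        grad_reg = 2.0 * lam * (w - w0)           # pulls weights back to pretrained ones
        w -= lr * (grad_data + grad_reg)
    return w


rng = np.random.default_rng(0)
n, d = 32, 8
x0 = rng.standard_normal((n, d))        # stand-in for latents of the subject images
eps = rng.standard_normal((n, d))       # fixed noise draws keep the toy deterministic
w0 = 0.1 * rng.standard_normal((d, d))  # stand-in for pretrained weights
alpha, sigma = 0.8, 0.6                 # a single illustrative noise level


def data_loss(w):
    return np.mean(((alpha * x0 + sigma * eps) @ w - eps) ** 2)


w_free = finetune(w0, x0, eps, alpha, sigma, lam=0.0)
w_reg = finetune(w0, x0, eps, alpha, sigma, lam=1.0)
for name, w in (("lam=0", w_free), ("lam=1", w_reg)):
    print(name, data_loss(w), np.linalg.norm(w - w0))
```

A larger λ keeps the fine-tuned weights closer to the pretrained ones (preserving the prior) at the cost of fitting the few subject images less tightly.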

@article{shi2023MVDream,
  author = {Shi, Yichun and Wang, Peng and Ye, Jianglong and Mai, Long and Li, Kejie and Yang, Xiao},
  title = {MVDream: Multi-view Diffusion for 3D Generation},
  journal = {arXiv:2308.16512},
  year = {2023},
}